Measuring development productivity in the AI era - beyond lines of code and commits

Back in 2018 I wrote about why daily commits matter in software development. The response was overwhelming - both positive and critical. Some developers embraced the discipline of daily commits, while others pushed back, arguing that such metrics can become vanity numbers.

They're both right. Measuring developer productivity is a very complex topic, and it goes well beyond commits and PRs.

The Evolution of Developer Metrics

For years, tech leaders have chased the holy grail of developer productivity metrics. Lines of code? Too easy to game. Number of commits? As I discussed in my previous article, they matter - but they're just one piece of the puzzle. Story points completed? They often become a negotiation game rather than a measure of real progress.

Let me share a story that taught us an expensive lesson about over-relying on simple metrics.

A few years ago, we introduced daily commit frequency as one of our performance review criteria. The intention was good - we wanted to encourage continuous integration and regular code shipping. Some developers immediately embraced this practice, making it a habit to integrate their work daily while delivering small chunks of real value to users. However, others found creative ways to game the system and make their contribution graphs look better.

One senior developer started breaking up their work into tiny commits. Instead of making meaningful integration points, they'd commit minor changes separately - a variable rename here, a formatting change there. Their commit graph looked amazing, a sea of green squares on GitHub. During their performance review, the numbers looked fantastic.

However, when we dug deeper, we discovered that although the commit count was high, many of the commits had been artificially split up and carried no real value on their own, which defeated the whole point of continuous delivery.

This experience taught us that even well-intentioned metrics can be counter-productive when used in isolation. There must be a better way to think about developer productivity.

DORA Metrics: The Foundation

DevOps is a way of thinking about software development that focuses on team (rather than individual) delivery and real outcomes. The idea is to shorten the development lifecycle while continuously delivering high-quality software to end users as a team.

Google's DevOps Research and Assessment (DORA) team has given us four key metrics that provide a solid foundation for measuring team performance:

- Deployment Frequency

- Lead Time for Changes

- Change Failure Rate

- Time to Restore Service

These metrics are powerful because they measure outcomes, not just activity. Working with dozens of clients across different industries, we've found that teams focusing on these metrics tend to deliver more value consistently.
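To make the four metrics concrete, here is a minimal Python sketch of how they could be computed from a team's deployment history. The `Deployment` record shape, its field names, and the `dora_summary` helper are assumptions made for illustration; they are not part of any DORA tooling, and real pipelines would pull this data from a CI/CD system and an incident tracker.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # when the change reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_summary(deployments: list[Deployment], period_days: int) -> dict:
    """Summarize the four DORA metrics for one team over a period."""
    if not deployments:
        raise ValueError("need at least one deployment")
    failures = [d for d in deployments if d.failed]
    restore_times = [
        d.restored_at - d.deployed_at for d in failures if d.restored_at is not None
    ]
    return {
        # Deployment Frequency
        "deployments_per_day": len(deployments) / period_days,
        # Lead Time for Changes (commit to production)
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deployments),
        # Change Failure Rate
        "change_failure_rate": len(failures) / len(deployments),
        # Time to Restore Service (None if nothing failed in the period)
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Made-up sample data: four deployments in four days, one of which failed.
t0 = datetime(2024, 1, 1, 9, 0)
sample = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=8),
               failed=True, restored_at=t0 + timedelta(days=1, hours=9)),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=6)),
    Deployment(t0 + timedelta(days=3), t0 + timedelta(days=3, hours=2)),
]
summary = dora_summary(sample, period_days=4)
print(summary)
```

Note that every number here describes the team's delivery pipeline as a whole. Nothing in the calculation attributes a deployment or a failure to an individual developer, which is exactly the point.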

“The best teams deploy 973x more frequently and have lead times 6570x faster when compared to low performers.” - State of DevOps Reports

But here's the catch - DORA metrics alone don't tell the whole story as they are very focused on code, infrastructure, and delivery, but not necessarily on other important human and collaboration aspects.

SPACE: The Missing Human Element

This is where the SPACE framework comes in, adding crucial dimensions that DORA doesn't capture:

- Satisfaction and well-being

- Performance

- Activity

- Communication and Collaboration

- Efficiency and flow

Working with both startups and corporations, I've seen how these human factors often matter more than pure deployment metrics. A team with perfect DORA scores but low satisfaction and poor collaboration won't sustain their performance long-term.

One poor practice that shows why DORA is not enough is the "Deploy Friday" syndrome. It occurs when product managers insist on pushing major features every Friday afternoon to hit arbitrary sprint deadlines, despite the operations team's concerns about weekend support and developers' warnings about testing time. While deployment metrics might look impressive, this creates a toxic cycle of weekend firefighting, rushed testing, accumulating technical debt, and team burnout - ultimately leading to higher defect rates, slower delivery, increased costs, and talent loss. Some of these effects may eventually show up in DORA metrics and some may not, but either way they create people-management problems and risks.

Another example is the "Requirements Waterfall" trap that happens when a business analyst spends weeks or months gathering requirements in isolation, produces a 200-page specification document that no one reads, and throws it over the wall to development. The document is immediately outdated as market conditions change, lacks technical context since engineers weren't consulted, and contains contradictory requirements that weren't validated with actual users. When development inevitably hits roadblocks, there's no clear process for clarification, leading to delays, misaligned features, and finger-pointing between teams.

Examples like these show that bad planning, communication, and collaboration practices can create an environment in which it becomes really hard to maintain a healthy development flow and a good engineering culture.

Codermetrics - An Interesting Read for Engineering Leaders

While DORA and SPACE are relatively popular, I also wanted to mention a really interesting book that is less well known. Jonathan Alexander's "Codermetrics" (O'Reilly, 2011) changed how I thought about measuring developer productivity.

Unlike many books that focus purely on quantitative metrics, Alexander introduces human-centric measurements that consider both the social and technical aspects of software development. He presents practical approaches to measuring things like knowledge sharing, code stewardship, and mentorship - aspects that traditional metrics often miss.

For example, his "Collaboration Quotient" looks at team dynamics through metrics like:

- Number of successful pull request reviews

- Frequency of design document contributions

- Time spent mentoring other developers

- Cross-team project participation

It's just a small example of how Alexander considers collaboration and communication practices that go well beyond simple outputs like lines of code or commits.

While somewhat older now, the principles in Codermetrics remain surprisingly relevant, especially its emphasis on using metrics to improve team dynamics rather than just measuring output (e.g. lines of code and commits).

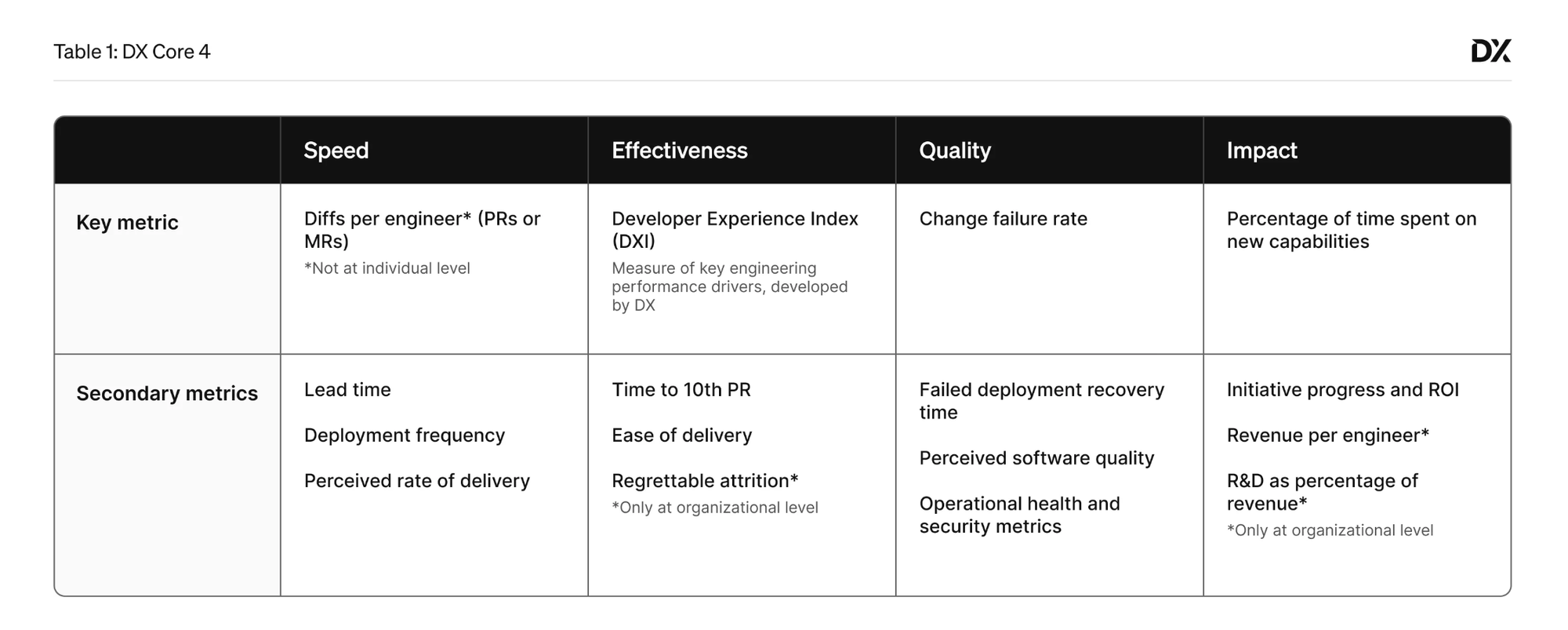

DX Core 4: Unifying Developer Productivity Measurement

To round out our review of old and current measurement systems, it is important to mention DX Core 4 - a unified framework that combines the best aspects of DORA, SPACE, and developer experience metrics. It measures four key dimensions:

- Speed - How quickly can teams deliver? (Think: PRs/MRs per engineer, deployment frequency, etc.)

- Effectiveness - How well are developers able to work? (Measured through the Developer Experience Index)

- Quality - Are we shipping reliable code? (Change failure rate and recovery metrics)

- Impact - Are we delivering real value? (Time spent on new capabilities vs maintenance)

What makes DX Core 4 different is its balance. Teams that excel in all four dimensions consistently outperform those that only focus on one or two areas. This holistic approach helps organizations avoid the common trap of optimizing for speed at the expense of quality or developer experience.

The Developer Experience Index (DXI) is particularly interesting as it measures how effectively developers can do their work. It looks at factors like flow state, feedback loops, and cognitive load. Think about those days when you're in the zone, your tests run quickly, and you're shipping quality code - that's what a good DXI looks like. But it goes beyond individual productivity. The index also captures collaboration patterns: how well teams share knowledge, the quality of code reviews, and the effectiveness of technical discussions. After all, software development is a team sport.

Planning practices play a crucial role in all four dimensions of the framework. Good planning reduces cognitive load (effectiveness), enables predictable delivery (speed), prevents rushed implementations (quality), and ensures we're working on what matters (impact). The framework helps expose when planning is broken - like when stories are too large, requirements are unclear, or technical discovery is insufficient. These planning issues show up as reduced effectiveness scores, increased lead times, and lower impact metrics. By connecting planning practices to concrete metrics, DX Core 4 helps teams identify and fix process issues before they affect delivery.

The framework is designed to prevent the common pitfalls of productivity measurement by ensuring that no single metric can be gamed without affecting the others. Each dimension provides checks and balances against the others.
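The checks-and-balances idea can be sketched in a few lines of Python. This is not part of DX Core 4 itself: the 0-100 scores, the `find_tradeoffs` helper, and the threshold of 5 points are all hypothetical, and the only assumption is that each dimension has been normalized so that higher is better. The sketch flags periods in which one dimension improved noticeably while another regressed, which is the pattern you would expect when a single metric is being optimized (or gamed) at the expense of the rest.

```python
DIMENSIONS = ("speed", "effectiveness", "quality", "impact")


def find_tradeoffs(previous: dict[str, float], current: dict[str, float],
                   threshold: float = 5.0) -> list[tuple[str, str]]:
    """Return (improved, regressed) dimension pairs between two periods.

    Scores are assumed to be normalized so that higher is better.
    """
    delta = {d: current[d] - previous[d] for d in DIMENSIONS}
    improved = [d for d in DIMENSIONS if delta[d] >= threshold]
    regressed = [d for d in DIMENSIONS if delta[d] <= -threshold]
    return [(up, down) for up in improved for down in regressed]


# Made-up scores: speed jumped while quality dropped.
last_quarter = {"speed": 60.0, "effectiveness": 70.0, "quality": 80.0, "impact": 55.0}
this_quarter = {"speed": 75.0, "effectiveness": 68.0, "quality": 66.0, "impact": 56.0}

tradeoffs = find_tradeoffs(last_quarter, this_quarter)
for up, down in tradeoffs:
    print(f"{up} improved while {down} regressed - worth a conversation")
```

A flagged pair is a prompt for a conversation with the team, not a verdict. The small dips in effectiveness and the small gain in impact stay below the threshold and are ignored as noise.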

A critical aspect of DX Core 4 is that it works at all levels of the organization. From CEOs wanting to understand engineering efficiency to team leads looking to remove bottlenecks, these metrics provide meaningful insights without becoming vanity metrics.

Measuring Productivity in the AI Era

AI development tools like GitHub Copilot, Cursor, Claude, etc. have transformed how we think about and how we measure developer productivity. These tools can generate large amounts of code quickly, which makes measuring developer effectiveness more important, not less.

Traditional metrics become insufficient when AI can produce hundreds of lines of code in seconds. With AI handling routine coding tasks, the human aspects of development become paramount. The most productive developers excel at breaking down complex problems, sharing effective prompting patterns, focusing on architecture/system design, and maintaining high documentation standards. Teams that thrive with AI often spend more time on design and collaboration than coding, making non-coding metrics increasingly crucial.

This shift emphasizes the importance of tracking new indicators: time spent in problem-definition discussions, quality of technical design documents, the effectiveness of knowledge sharing about AI tools, and team collaboration patterns.

The SPACE framework (Satisfaction, Performance, Activity, Communication, Efficiency) and similar holistic frameworks take on new significance as teams adapt to integrate AI into their development processes, with particular attention to how these tools impact developer satisfaction and team dynamics.

The Role of Leadership and Organizational Design

Even the best metrics frameworks fail without proper organizational support. While scaling our company, we've learned that productivity measurement must be backed by thoughtful organizational design and leadership practices.

This aligns with Google's groundbreaking research through Project Oxygen and Project Aristotle, which revealed that team effectiveness isn't primarily about individual performance metrics or technical expertise. Their research found that psychological safety, dependability, and structure/clarity were far more critical to team success than individual performance measures.

Leaders need to create environments where productive behaviors can flourish. This means:

- Designing team structures that promote collaboration over competition

- Aligning incentives with desired outcomes, not just measurable outputs

- Building psychological safety so teams can experiment and fail safely (Google's research found this to be the #1 predictor of team success)

- Creating clear paths for career progression that don't rely solely on quantitative metrics

- Establishing feedback loops between development teams and business stakeholders

We've seen cases where teams with perfect DORA metrics struggled because of organizational silos, while teams with "worse" metrics delivered more value due to better alignment with business goals and stronger cross-functional collaboration. Google's research found that successful teams need "structure and clarity" - with clear roles, plans, and goals. Their findings showed that high-performing teams have well-defined expectations and understand how their work contributes to the organization's broader objectives.

The most successful organizations we work with treat productivity measurement as part of a broader system that includes organizational design, leadership development, and culture building. They understand that metrics are tools for improvement, not weapons for enforcement - a principle that echoes Google's findings about the importance of creating a culture of trust and continuous learning.

Balancing Multiple Frameworks

This article's goal is not to prescribe a specific solution but to show that there is a need to evaluate many different metrics and to look at software engineering more holistically.

The key is not to choose between DORA, SPACE, or other metrics, but to use them in combination and to learn from them:

- Review DORA, SPACE, and DX Core 4 metrics to find an optimal set of metrics that will give you a holistic picture of dev productivity

- Consider human factors from Codermetrics and Google's re:Work like proper team structures, culture, knowledge sharing, and mentorship

- Add context-specific metrics that matter for your specific team and projects and focus on outcomes and actual value delivered to users/customers

Conclusion / Moving Forward

As leaders, we need to resist the urge to reduce developer productivity to simple numbers. This becomes even more crucial in the AI era. Yes, metrics like daily commits have their place - but they should be conversation starters, not conclusions.

The next time you're tempted to measure productivity by counting lines of code or commits, step back and ask: Are we measuring what matters, or just what's easy to measure?

Real productivity in software development isn't about how much you produce - it's about how much value you create for a customer. Sometimes, the most valuable thing a developer can do is delete code, improve documentation, or help a colleague understand a complex system.

Some things are harder to measure than others, but whenever possible we should focus on outcomes over outputs and on the team over the individual.

Further Reading

For those interested in diving deeper into this topic, I recommend: